Slicing and QoS

Overview

Network slicing enables sharing the same physical infrastructure between independent logical networks, each one targeting different use cases while providing isolation guarantees. Slicing permits the implementation of tailor-made applications with Quality of Service (QoS) specific to the needs of each slice, rather than a one-size-fits-all approach.

SD-Fabric supports slicing and QoS using dedicated hardware resources such as scheduling queues and meters. Once a packet enters the fabric, it is associated with a slice ID and a Traffic Class (TC). The slice ID is an arbitrary identifier, while the TC is used to determine the QoS parameters. The combination of slice ID and TC is used by SD-Fabric to determine which switch hardware queue to use.

We provide fabric-wide isolation and QoS guarantees. Packets are classified by the first leaf switch in the path. We then use a custom DSCP-based marking scheme to apply the same treatment on all other switches.
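To illustrate how a DSCP-based marking can carry both identifiers across the fabric, the following sketch packs a slice ID and a TC into the 6-bit DSCP field. The bit layout (4 bits of slice ID followed by 2 bits of TC) and the function names are assumptions made for this example, not something stated in this guide. Check the fabric-tna P4 source for the actual encoding.

```python
from typing import Tuple

SLICE_ID_BITS = 4  # assumed width, not stated in this document
TC_BITS = 2        # assumed width, not stated in this document


def encode_dscp(slice_id: int, tc: int) -> int:
    """Pack a slice ID and a TC into a 6-bit DSCP value (assumed layout)."""
    if not 0 <= slice_id < (1 << SLICE_ID_BITS):
        raise ValueError("slice_id out of range")
    if not 0 <= tc < (1 << TC_BITS):
        raise ValueError("tc out of range")
    return (slice_id << TC_BITS) | tc


def decode_dscp(dscp: int) -> Tuple[int, int]:
    """Recover (slice_id, tc) from a value produced by encode_dscp."""
    if not 0 <= dscp < (1 << (SLICE_ID_BITS + TC_BITS)):
        raise ValueError("dscp out of range")
    return dscp >> TC_BITS, dscp & ((1 << TC_BITS) - 1)
```

With such a scheme, only the first leaf needs the classification rules. Every other switch can recover the slice ID and TC by decoding the DSCP field.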

Classification can be performed either for regular traffic via REST APIs, or for GTP-U traffic terminated by P4-UPF via PFCP integration.

Traffic Classes

We support the following traffic classes to cover the spectrum of potential applications, from latency-sensitive to throughput-intensive.

Control

For applications demanding ultra-low latency and jitter guarantees, with non-bursty, low throughput requirements on the order of hundreds of packets per second. Examples of such applications are consensus protocols, industrial automation, and timing. This class uses a queue shared by all slices, serviced with the highest priority. To enforce isolation between slices, and to avoid starvation of lower-priority classes, each slice is processed through a single-rate two-color meter. Slices sending at rates higher than the configured meter rate might observe packet drops.
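The behavior of a single-rate two-color meter can be sketched as a token bucket. This is a simplified software model, not the switch implementation: it counts packets rather than bytes, and the class name and parameters are hypothetical.

```python
class TwoColorMeter:
    """Single-rate two-color meter modelled as a token bucket (in packets)."""

    def __init__(self, rate_pps: float, burst_pkts: float) -> None:
        self.rate_pps = rate_pps      # committed rate, packets per second
        self.burst_pkts = burst_pkts  # bucket depth, packets
        self.tokens = burst_pkts      # start with a full bucket
        self.last_ts = 0.0            # timestamp of the previous packet

    def mark(self, now: float) -> str:
        """Return "green" if the packet conforms to the rate, else "red"."""
        elapsed = now - self.last_ts
        self.last_ts = now
        # Refill tokens for the elapsed time, capped at the bucket depth.
        self.tokens = min(self.burst_pkts, self.tokens + elapsed * self.rate_pps)
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return "green"
        return "red"  # candidate for dropping
```

In this model each slice would own one meter instance, so a slice that exceeds its rate sees its own packets marked red without affecting the other slices sharing the Control queue.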

Real-Time

For applications that require both low-latency and sustained throughput. Examples of such applications are video and audio streaming. Each slice gets a dedicated Real-Time queue serviced in a Round-Robin fashion to guarantee the lowest latency at all times even with bursty senders. To avoid starvation of lower priority classes, Real-Time queues are shaped at a maximum rate. Slices sending at rates higher than the configured maximum rate might observe higher latency because of the queue shaping enforced by the scheduler. Real-Time queues have priority lower than Control, but higher than Elastic.

Elastic

For throughput-intensive applications with no latency requirements. This class is best suited for large file transfers, Intranet/enterprise applications, prioritized Internet access, etc. Each slice gets a dedicated Elastic queue serviced in Weighted Round-Robin (WRR) fashion with configurable weights. During congestion, Elastic queues are guaranteed to receive minimum bandwidth that can grow up to the link capacity if other queues are empty.
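As a rough illustration of how WRR weights translate into bandwidth, the sketch below computes the idealized share of each Elastic queue. It assumes that `capacity_bps` is whatever capacity is left for the Elastic class after higher-priority traffic has been served, and it ignores packet-size effects. The function and parameter names are hypothetical.

```python
from typing import Dict, Optional, Set


def elastic_shares(
    weights: Dict[str, int],
    capacity_bps: float,
    active: Optional[Set[str]] = None,
) -> Dict[str, float]:
    """Idealized WRR share per slice; idle queues give up their share."""
    if any(w <= 0 for w in weights.values()):
        raise ValueError("weights must be positive")
    # By default every queue is backlogged (full congestion).
    active = set(weights) if active is None else active
    total = sum(w for s, w in weights.items() if s in active)
    return {
        s: (capacity_bps * w / total if s in active else 0.0)
        for s, w in weights.items()
    }
```

For example, with weights 1 and 3 the two slices are guaranteed 25% and 75% of the available capacity under congestion. If the first slice goes idle, the second one can grow to the full capacity.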

Best-Effort

This is the default traffic class, used by packets not classified with any of the above classes. All slices share the same Best-Effort queue, which has the lowest priority.
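The queue-sharing rules of the four classes can be summarized as a lookup from (slice ID, TC) to a queue. The sketch below is only a model of those rules: the queue numbers and all names are hypothetical and do not reflect the identifiers used on a real switch.

```python
from enum import Enum
from typing import Dict, Iterable, Tuple


class TC(Enum):
    BEST_EFFORT = "best-effort"
    CONTROL = "control"
    REAL_TIME = "real-time"
    ELASTIC = "elastic"


BEST_EFFORT_QUEUE = 0  # hypothetical ID, shared by all slices
CONTROL_QUEUE = 1      # hypothetical ID, shared by all slices


def build_queue_map(slice_ids: Iterable[int]) -> Dict[Tuple[int, TC], int]:
    """Assign shared and dedicated queues following the rules above."""
    queue_map: Dict[Tuple[int, TC], int] = {}
    next_queue = 2  # first ID available for dedicated queues
    for slice_id in slice_ids:
        queue_map[(slice_id, TC.BEST_EFFORT)] = BEST_EFFORT_QUEUE
        queue_map[(slice_id, TC.CONTROL)] = CONTROL_QUEUE
        queue_map[(slice_id, TC.REAL_TIME)] = next_queue   # dedicated
        queue_map[(slice_id, TC.ELASTIC)] = next_queue + 1  # dedicated
        next_queue += 2
    return queue_map
```

Under this model, N slices need 2N + 2 queues per port, which is one way to estimate how many slices a given switch can host.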

Classification

Slice ID and TC classification can be performed in two ways.

Regular traffic

We provide ACL-like APIs that support specifying wildcard match rules on the IPv4 5-tuple.
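The matching semantics of such ACL-like rules can be sketched as follows. This is a software model for illustration only: the class names, the first-match-wins order, and the default slice ID of 0 are assumptions, and a field left as `None` acts as a wildcard.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Packet:
    src_ip: str
    dst_ip: str
    proto: int
    src_port: int
    dst_port: int


@dataclass(frozen=True)
class Rule:
    slice_id: int
    tc: str
    src_net: Optional[str] = None   # None means "match any"
    dst_net: Optional[str] = None
    proto: Optional[int] = None
    src_port: Optional[int] = None
    dst_port: Optional[int] = None

    def matches(self, pkt: Packet) -> bool:
        def ip_ok(net: Optional[str], addr: str) -> bool:
            return net is None or (
                ipaddress.ip_address(addr) in ipaddress.ip_network(net)
            )

        def eq_ok(want: Optional[int], got: int) -> bool:
            return want is None or want == got

        return (
            ip_ok(self.src_net, pkt.src_ip)
            and ip_ok(self.dst_net, pkt.dst_ip)
            and eq_ok(self.proto, pkt.proto)
            and eq_ok(self.src_port, pkt.src_port)
            and eq_ok(self.dst_port, pkt.dst_port)
        )


def classify(
    pkt: Packet,
    rules: List[Rule],
    default: Tuple[int, str] = (0, "BEST_EFFORT"),  # assumed default
) -> Tuple[int, str]:
    """Return (slice_id, tc) of the first matching rule, else the default."""
    for rule in rules:
        if rule.matches(pkt):
            return rule.slice_id, rule.tc
    return default
```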

P4-UPF traffic

For GTP-U traffic terminated by the embedded P4-UPF function, selection of a slice ID and TC is based on PFCP-Agent’s configuration (upf.json or Helm values). QoS classification uses the same table used for GTP-U tunnel termination. For this reason, to achieve fabric-wide QoS enforcement, we recommend enabling the UPF function on each leaf switch using the distributed UPF mode, so that packets are classified as soon as they enter the fabric.

The slice ID is specified using the p4rtciface.slice_id property in PFCP-Agent’s upf.json. All packets terminated by the P4-UPF function will be associated with the given slice ID.

The TC value is instead derived from the 3GPP’s QoS Flow Identifier (QFI) and requires coordination with the mobile core control plane (e.g., SD-Core). When deploying PFCP-Agent, you can configure a static many-to-one mapping between 3GPP’s QFIs and SD-Fabric’s TCs using the p4rtciface.qfi_tc_mapping property in upf.json. That is, multiple QFIs can be mapped to the same TC. Then, it’s up to the mobile core control plane to insert PFCP rules classifying traffic using the specific QFIs.
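The many-to-one nature of the QFI-to-TC mapping can be pictured with a plain dictionary. The sketch below is not the upf.json syntax, which is not shown in this guide. The QFI values, the TC names, and the fallback to Best-Effort for unmapped QFIs are all assumptions made for illustration.

```python
from typing import Dict

# Hypothetical mapping: several QFIs may point to the same TC.
QFI_TC_MAPPING: Dict[int, str] = {
    1: "CONTROL",
    5: "REAL_TIME",
    6: "REAL_TIME",
    9: "ELASTIC",
}


def tc_for_qfi(
    qfi: int,
    mapping: Dict[int, str],
    default: str = "BEST_EFFORT",  # assumed fallback for unmapped QFIs
) -> str:
    """Look up the TC for a QFI, falling back to the default class."""
    return mapping.get(qfi, default)
```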

Configuration

Note

Currently we only support static configuration at switch startup. To add new slices or modify TC parameters, you will need to reboot the switch. Dynamic configuration will be supported in future SD-Fabric releases.

Stratum allows configuring switch queues and schedulers using the vendor_config portion of the Chassis Config file (see Stratum Chassis Configuration). For more information on the format of vendor_config, see the guide for running Stratum on Tofino-based switches in the Stratum repository.

The ONOS apps are responsible for inserting switch rules that map packets into different queues. For this reason, the apps need to be aware of how queues are mapped to the different slices and TCs.

We provide a convenient script to generate both the Stratum and ONOS configuration starting from a high-level description provided via a YAML file. This file lets you define slices and configure TC parameters.

An example of such a YAML file can be found here.

To generate the Stratum config:

$ ./gen-qos-config.py -t stratum sample-qos-config.yaml

The script will output a vendor_config section which is meant to be appended to an existing Chassis Config file.

To generate the ONOS config:

$ ./gen-qos-config.py -t onos sample-qos-config.yaml

The script will output a JSON snippet representing a complete ONOS netcfg file with just the slicing portion of the fabric-tna app config. You will have to manually integrate this into the existing ONOS netcfg used for deployment.
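One possible way to integrate the generated snippet is a recursive merge of the two JSON documents, sketched below. This helper is not part of SD-Fabric, and the keys used in the usage comment are hypothetical placeholders. Review the merged result before pushing it to ONOS.

```python
import copy
from typing import Any, Dict


def deep_merge(base: Dict[str, Any], overlay: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of base with overlay merged in, recursing into dicts.

    Values from overlay win on conflict; neither input is modified.
    """
    merged = copy.deepcopy(base)
    for key, value in overlay.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Usage sketch with hypothetical file names:
#   import json
#   with open("netcfg.json") as f:
#       netcfg = json.load(f)
#   with open("slicing-netcfg.json") as f:   # output of gen-qos-config.py -t onos
#       slicing = json.load(f)
#   merged = deep_merge(netcfg, slicing)
```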

REST API

Adding and removing slices in ONOS can be performed only via netcfg. We provide REST APIs to:

- Get information on slices and TCs currently in the system
- Add/remove classifier rules

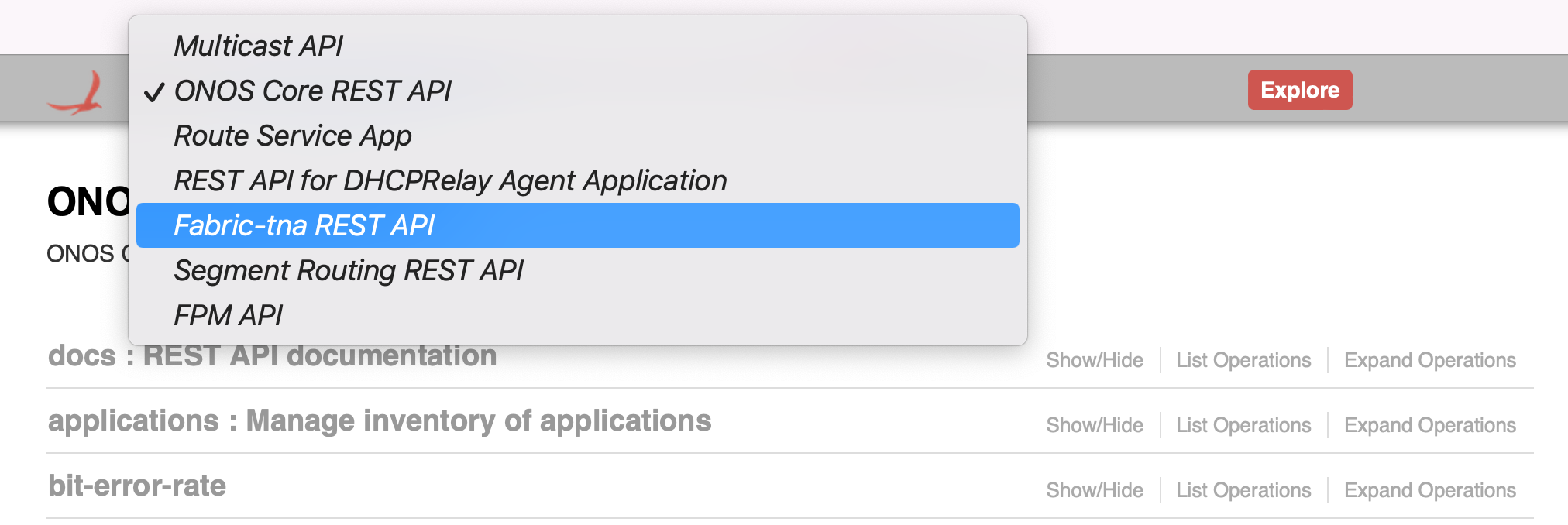

For the up-to-date documentation and example API calls, please refer to the

auto-generated documentation on a live ONOS instance at the URL

http://<ONOS-host>>:<ONOS-port>/onos/v1/docs.

Make sure to select the Fabric-TNA RESt API view:

Classifier Flows

We provide REST APIs to add/remove classifier flows. A classifier flow is used to instruct switches on how to associate packets with slices and TCs. It is based on an abstraction similar to an ACL table, describing rules matching on the IPv4 5-tuple.

Here’s an example classifier flow in JSON format to be used in REST API calls. For the actual API methods, please refer to the live ONOS documentation.

{
  "criteria": [
    {
      "type": "IPV4_SRC",
      "ip": "10.0.0.1/32"
    },
    {
      "type": "IPV4_DST",
      "ip": "10.0.0.2/32"
    },
    {
      "type": "IP_PROTO",
      "protocol": 6
    },
    {
      "type": "TCP_SRC",
      "tcpPort": 1000
    },
    {
      "type": "TCP_DST",
      "tcpPort": 80
    },
    {
      "type": "UDP_SRC",
      "udpPort": 1000
    },
    {
      "type": "UDP_DST",
      "udpPort": 1812
    }
  ]
}
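When generating many classifier flows, it can be convenient to build the JSON body programmatically. The helper below is a hypothetical sketch that reuses the criterion types and field names from the example above. It assumes that omitting a criterion leaves that field wildcarded, which should be verified against the live ONOS documentation. It intentionally does not perform the REST call, since the API methods are documented there.

```python
import json
from typing import Any, Dict, List, Optional

IP_PROTO = {"tcp": 6, "udp": 17}  # IANA protocol numbers


def classifier_flow(
    src_ip: Optional[str] = None,
    dst_ip: Optional[str] = None,
    l4: Optional[str] = None,      # "tcp", "udp", or None
    src_port: Optional[int] = None,
    dst_port: Optional[int] = None,
) -> Dict[str, Any]:
    """Build a classifier flow body; omitted fields produce no criterion."""
    criteria: List[Dict[str, Any]] = []
    if src_ip is not None:
        criteria.append({"type": "IPV4_SRC", "ip": src_ip})
    if dst_ip is not None:
        criteria.append({"type": "IPV4_DST", "ip": dst_ip})
    if l4 is not None:
        if l4 not in IP_PROTO:
            raise ValueError("l4 must be 'tcp' or 'udp'")
        criteria.append({"type": "IP_PROTO", "protocol": IP_PROTO[l4]})
        prefix, port_key = l4.upper(), l4 + "Port"
        if src_port is not None:
            criteria.append({"type": prefix + "_SRC", port_key: src_port})
        if dst_port is not None:
            criteria.append({"type": prefix + "_DST", port_key: dst_port})
    elif src_port is not None or dst_port is not None:
        raise ValueError("ports require l4 to be set")
    return {"criteria": criteria}


if __name__ == "__main__":
    # Example: all UDP traffic towards 10.0.0.2 on port 1812.
    flow = classifier_flow(dst_ip="10.0.0.2/32", l4="udp", dst_port=1812)
    print(json.dumps(flow, indent=2))
```

Tying the port criteria to the selected protocol avoids producing a flow that mixes TCP and UDP port matches in the same rule.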